These days, it’s hard to shake the feeling that everything is changing. Unfortunately, we cannot provide much more stability, because things are about to change. This edition of SEO News for the month of October asks whether the Internet as we know it will still exist in ten years, and explores what Google has planned for the next 20 years.

1) The Brave New World of Google

Major birthdays are a welcome occasion to take stock and look ahead. It’s no different for companies and institutions. The search engine Google is currently celebrating its 20th anniversary. To mark the occasion, the Head of Search, Ben Gomes, who was promoted just a few months ago, has attempted to construct a grand narrative in the form of a blog post. Gomes’ story begins with his childhood in India, when his only access to information was a public library, a remnant of Britain’s long-vanished colonial power, and finishes with the modern search engine. Gomes suggests that personalisation, automation and relevance are the cornerstones of a quality product that, according to him, still follows the original vision: “To organize the world’s information and make it universally accessible and useful”. But is this goal being sacrificed globally on the altar of proportionality? SEO News will take up this question again below, with regard to the double standards in dealing with China.

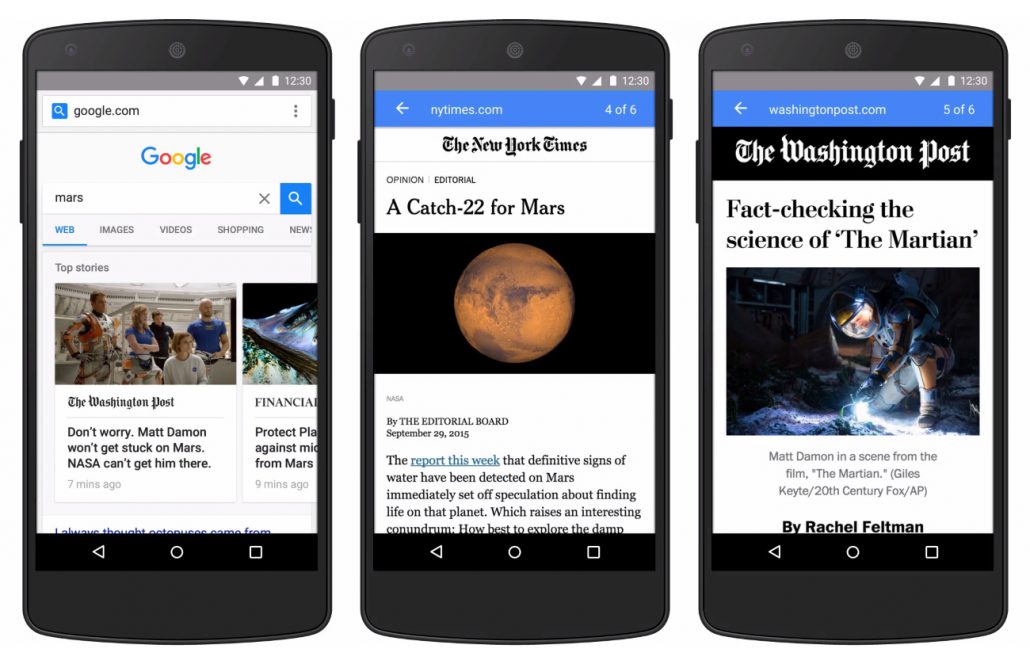

An interesting issue for everyday SEO work, however, is a paradigm shift which Gomes believes will be groundbreaking for Google over the next 20 years. The Head of Search confirms the vision of an invisible and omnipresent information, solutions and convenience machine. According to Google, the transformation to this ubiquitous service is to follow three fundamental processes of change. The first is even stronger personalisation. At this level, Google wants to evolve from a situation-dependent provider of answers into a constant companion. According to Gomes, users’ recurring information deficits and ongoing research projects will be recognised, taken up and handled. This is to be achieved, above all, by restructuring the user experience on the Google results page. All sorts of personalised elements will appear here in the near future to help users make their journey through the infinite information universe more efficient. In this process, the user does not only get to know themselves; more importantly, and it goes without saying, the search engine gets to know the user.

But before any criticisms can arise, we move swiftly on to the second paradigm shift: The answer before the question.

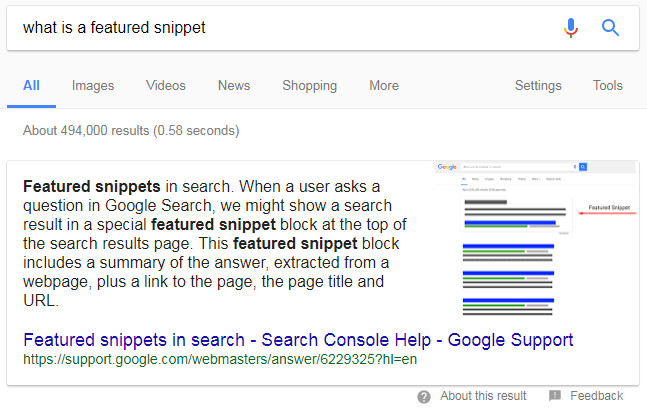

Google has set out to identify and prepare information relevant to the individual user, even before they have formulated a search query at all. The key element here is technological. Following “Artificial Intelligence” and “Deep Learning”, a technique called “Neural Matching” should be especially helpful: It links the representation expressed by text, language or image with the higher-level object or concept. This represents the continuation of the concept of semantic searches and entities with new technological concepts, and is exceptionally consistent from a business perspective.

The third pillar of the change should be a greater openness to visual information in the search systems. Visual search has great potential for users and advertisers, as we have already discussed several times before. Google is taking action immediately, introducing a complete overhaul of its image search and integrating its AI-driven image recognition technology “Lens” into the new generation of in-house “Pixel” smartphones. The interesting thing about Google’s anniversary publication is what it doesn’t mention: the voice assistant Google Home. This is a good sign that, despite all market constraints, Google is not distancing itself from its technological DNA and is not allowing itself to be pushed into a competition with the voice market leader Amazon. Contrary to the publicised hype, voice software has yet to create a huge stir in the search world.

2) The end of the networked world

Oh, how everything is connected: The individual, the world, technology and democracy. More and more aspects of our existence are digitised or transmitted via digital channels. In this process, it always comes back to bias. The well-known tech companies are acting as the pacesetters of this upheaval with their platforms. It may not be too long before Facebook, Amazon or Google establish themselves as the quasi-institutionalised cornerstones of our social and economic systems. Even today, the real creative power of these companies often exceeds the capabilities of existing state regulations. And search engines are at the centre of this development as a human-machine interface and mediation platform. The most relevant shopping search engine Amazon, for example, is changing not only our personal consumption habits but also the appearance of our cities and landscapes, with its radical change in the retail sector. The convenience for the consumer has resulted in empty shops in the inner cities and miles of faceless logistics loading bays in the provinces. Meanwhile, global populism has cleverly used social and informational search systems to accurately position and reinforce its messages. Facebook and Google have contributed at least partially to the sudden and massive political upheaval in one of the largest democracies in the world. Maintaining their self-image as pure technology companies, Google, Facebook and the like, however, have so far persistently refused to accept responsibility for the consequences of their actions. Apart from public repentance and the vague announcement that they are looking for “technical solutions”, they have shown little openness to adapting their strategies to the intrinsic systemic dangers. So the interesting question is: do global technology companies have to represent those values of freedom and democracy that have laid the foundation for their own rise and success in the US and Western Europe? 
Or can companies such as Google or Facebook be flexible depending on the market situation, and utilise their technological advantage in dubious cases in the context of censorship and repression? Currently, the state of this debate can be seen in Google’s project “Dragonfly”. Since Mountain View refused to censor its product content, the global leader has been denied access to the world’s largest and fastest-growing market. When Google ceased all activities in China in 2010, the People’s Republic was forced to do without it, and managed pretty well: its own flagships Baidu, Tencent and Alibaba have done just fine without the competition. According to consistent media reports, Google has been working for several months to restart its involvement in the Middle Kingdom, with the blessing of the government in Beijing. Under the working title “Dragonfly”, Google is reportedly planning to launch a Search app and a Maps app. Working closely with the Chinese authorities, and under state control and censorship, these apps are expected to pave the way for future, widespread activities for Mountain View in the People’s Republic. It just goes to show that Google is prepared to play the game, if the price is right. This approach can be seen as pragmatically and economically motivated, particularly in light of the fact that the Chinese authorities recently granted Google’s competitor Facebook approval for a company, then withdrew it after only one day. Rampant discord in the West and cooperative subordination in Asia: former Google CEO Eric Schmidt outlined the consequences of this double standard a few days ago in San Francisco. Schmidt told US news channel CNBC that he expects the Internet to divide over the next decade. He predicts a split into a Chinese-dominated and a US-dominated Internet by 2028 at the latest. Apparently, Silicon Valley has already given up on the vision of a global and open network for the world.
However, the consequences of this development will be felt by every individual.